Queues allow you to limit the number of parallel queries and the memory allocated to them, depending on the user or rules.

#REDSHIFT SORTKEY FULL#

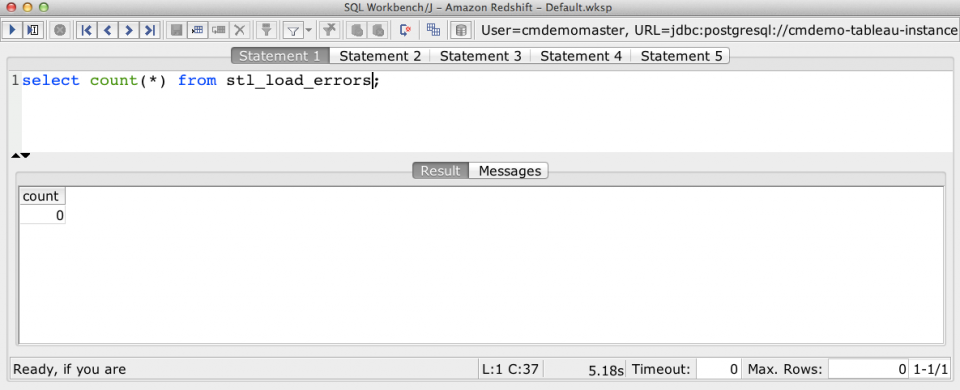

It’s bad, but Redshift without queue management can be even worse (numerous disk full error and slow queries). Sometimes your queries are blocked by the “queues” aka “Workload Management” (WLM). In other cases, this “terminate” command is not enough and you have to … Reboot the cluster. In some cases, the query can be slow to revert (as Redshift needs to rollback some tasks). To unlock the item, you can either wait, or delete the query by doing: SELECT pg_terminate_backend( your_pid_here) If “lock_mode” is “AccessExclusiveLock”, you have a locked item. To identify those locks, you can execute this query: SELECT * FROM svv_transactions

Doing so, it prevent queries from accessing to those tables. Some queries (like DROP or TRUNCATE) need an “exclusive access” to tables. There are 4 possible causes: locks, bad queues, architecture, bad performance. You can see that on Dec-30 at 2:40 (ETL scheduling), we had more waiting time than query execution (reading + writing time). Read the “query plan” to know if there is a data redistribution:.When you JOIN data coming from Spectrum, use a temporary table with Distkey to avoid data redistribution from a huge Spectrum table.No nested SELECT, they don’t remember the Distkey.Do regular Vacuum and Analyze, so Redshift can use the Distkeys.For example, if you have several tables with client_id and you know you will make JOIN with it, use it as the Distkey. Use Distkeys, which indicates how your data are split between your nodes. Avoid data redistribution between nodes that multiplies the volume of your query SEVERAL times.Limit the source tables with “where” filters.Never use select *, take only the column you need.Don’t use large varchar columns (VARCHAR > 256) for “join” as the keys could be put in memory for faster join, which can overload memory.get max varchar size of your_column SELECT max(octet_length(your_column)) FROM your_table Query optimization for volume handling You can use the following query to know the max varchar size of a column: Reduce at maximum the varchar columns as they are responsible for most of your disk space use.Remove tables that are never used (not scanned since 30 days).If it’s a varchar column, encode it as ZSTD. ENCODE every column (except sortkeys) as AZ64.As it is a fake resizing (it redistributes the slice, not the data, which can induce skewness), go back to your previous configuration after doing it. If you need temporary space, you can do an elastic resizing of your cluster to get more space.Choose classic resizing if you add some nodes. If you are above it and there is nothing to reduce it, you need more nodes. Good practice: 66% of disk used by data when there is no Swap.

Unload as Parquet the “old” data into S3. Ask your teams what data they need on a regular basis, and what data are considered “old”. Use Spectrum to externalize historical data.To identify the tables that need your attention the most: SELECT “table”, size, tbl_rows FROM svv_table_info ORDER BY size DESC Architecture for volume management

#REDSHIFT SORTKEY HOW TO#

We will see how to avoid this through volume management. All the disk was used, causing a massive crash. What did happen? There was not enough memory space, so the query used the disk to store the temporary needed data. Fun fact: the user often thinks he is the source of the problem and keeps it to himself, ashamed. So, you can expect to have every user coming to you to know what did happen. When disk hits more than 100%, every query is killed (not only yours).

0 kommentar(er)

0 kommentar(er)